Every programming language has its own weaknesses, but we still learn them and pretend it will never happen to us.

Moral of the story: JUST LEARN THE PROGRAMMING LANGUAGE THAT CAN MAKE YOU MORE MONEY, NOT THE ONE YOU LIKE, BECAUSE YOU NEED MONEY.

“JavaScript” isn’t so bad with React + Next + TypeScript + Lodash + …

If a chicken could code, it would probably work like JavaScript. This is accurate.

When I had a flock, for example, sometimes one would flip over a bucket onto itself and then decide it must be night and go to sleep.

if a chicken could code, it would use CHICKEN.

Oh, this is actually a real thing. I was rolling my eyes like “just show me the clicks and clucks in the code”.

Like the cow one that’s just a sequence of differently-capitalised moos.

I wish I could go to sleep at any time of day with just a bucket.

Flying short distances and the ability to expand your neck like 4x also look cool.

What if it was on purpose?

I wouldn’t put it past them. The one I saw climb into the hay loft on an upright human ladder knew what it was doing. Ditto for the time they excavated out a secret base under the deck and started laying eggs inside.

Never a dull moment with those guys.

Keep an eye on em, everyone I know who interacts with chickens has said the same thing.

Granted that’s like one dude, but still. 😆

If there was proof that chickens could contribute to the ECMAScript standard, I would probably stop being vegan tbf

if cows could be on the C++ committee i would eat nothing but hamburgers

Wait, do vegetables have good feelings or evil ones??

Even more evil, trust me, I lived with one once

I find the hardest part about eating vegetables is getting around the wheelchair.

Replace the bathtub with a cooking pot and you’ll have your vegetables slide in just like that.

Plop them on the bed first

Hol’ up a minute

JavaScript and not Coq?

There is also PHP

You Coqsucker.

I’ve been programming in TypeScript recently, and can I just say: I fucking hate JavaScript and TypeScript. It’s such a pain, with so many odd behaviors.

I like custom types and them being able to follow custom interfaces; it makes for great type safety that almost no other language can guarantee!

What I’m saying is I’m learning Rust.

Exactly

Lol, name one outside of its well-known equality rules that linters check for.

Also, name the language you think is better.

Because for those of us who have coded in languages that are actually bad, hearing people complain about triple equals signs for the millionth time seems pretty lame.
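For anyone who hasn’t run into them, the equality rules in question mostly come down to `==` coercing its operands while `===` does not (ESLint’s `eqeqeq` rule is the usual linter check). A minimal sketch; the helper names `looseEquals` and `strictEquals` are made up for illustration, and the parameters are typed `unknown` only because tsc rejects comparing, say, the literals `0` and `""` directly.

```typescript
// Hypothetical helpers. Parameters are `unknown` so tsc accepts mixed-type
// comparisons; comparing the literals 0 and "" directly is a compile error.
function looseEquals(a: unknown, b: unknown): boolean {
  return a == b; // coerces operands before comparing
}

function strictEquals(a: unknown, b: unknown): boolean {
  return a === b; // no coercion: values of different types are never equal
}

const cases: Array<[unknown, unknown]> = [
  [0, ""],          // "" converts to the number 0
  [1, "1"],         // "1" converts to the number 1
  [null, undefined] // special-cased as loosely equal
];

for (const [a, b] of cases) {
  console.log(a, b, "loose:", looseEquals(a, b), "strict:", strictEquals(a, b));
}
```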

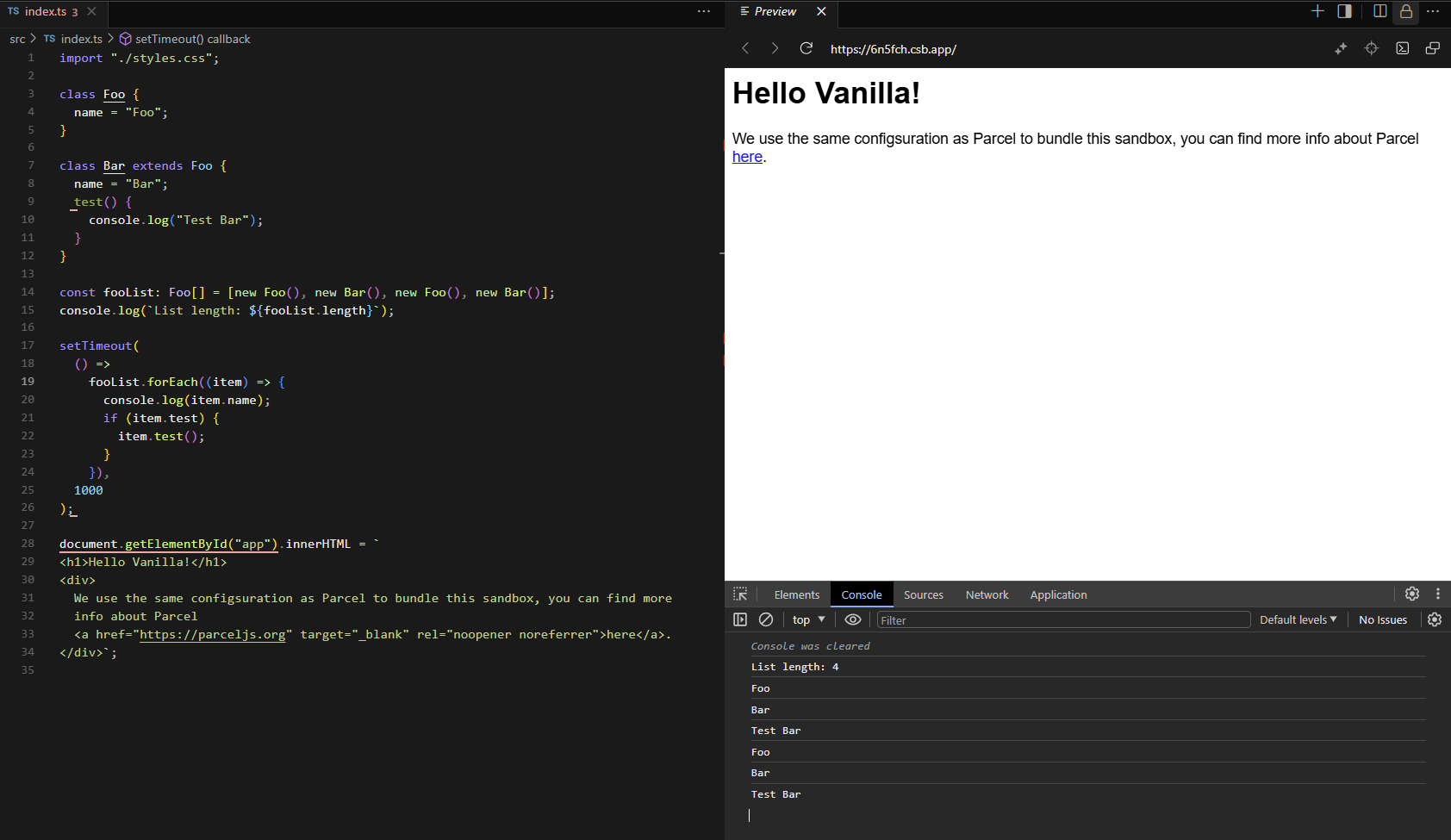

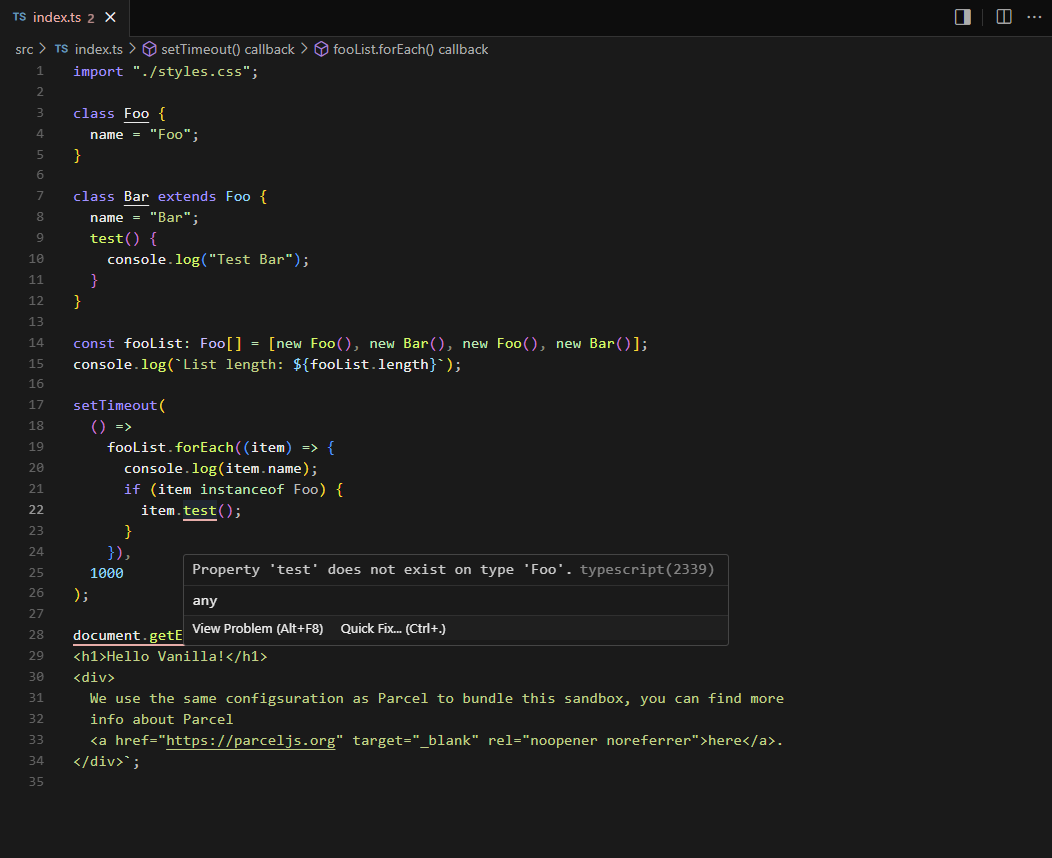

Recently I encountered an issue with “casting”. I had a class “foo” and a class “bar” that extended class foo. I made a list of class “foo” and added “bar” objects to the list. But when I took objects from the “foo” list, cast them to bar, and attempted to use a “bar” member function, I got a runtime error saying it didn’t exist. Maybe this was user error, but it doesn’t align with what I’ve come to expect from languages.

I just feel like instead of slapping some silly abstraction on a language, we should actually work on integrating a proper type-safe language in its stead.

I think that might be user error as I can’t recreate that:

Yeah, you would get a runtime error calling that member without checking that it exists.

Because that object is of a type where that member may or may not exist. That is literally the exact same behaviour as Java or C#.

If I cast or type check it to make sure it’s of type Bar rather than checking for the member explicitly it still works:

And when I cast it to Foo it throws a compile time error, not a runtime error:

I think your issues may just lie in the semantics of how type checking works in JavaScript / TypeScript.
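A minimal sketch of the pattern being discussed, assuming classes `Foo` and `Bar` as named in the comments and a hypothetical `barOnly()` method: an `instanceof` check is a real runtime test that also narrows the type for the compiler, while an `as Bar` assertion is simply trusted by the compiler and checked by nothing at runtime.

```typescript
class Foo {
  describe(): string {
    return "foo";
  }
}

class Bar extends Foo {
  // Hypothetical member that exists only on the subclass.
  barOnly(): string {
    return "bar-only";
  }
}

// A list typed as Foo[] can also hold Bar instances.
const items: Foo[] = [new Foo(), new Bar()];

function useBars(list: Foo[]): string[] {
  const results: string[] = [];
  for (const item of list) {
    // instanceof runs at runtime and narrows `item` to Bar inside the branch.
    if (item instanceof Bar) {
      results.push(item.barOnly());
    }
  }
  return results;
}

// `as Bar` is a compile-time assertion only: if `item` is really a plain Foo,
// barOnly is undefined at runtime and the call throws a TypeError.
function unsafeUse(item: Foo): string {
  return (item as Bar).barOnly();
}

console.log(useBars(items)); // logs an array containing only "bar-only"
```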

@masterspace “Undeclared variable” is a runtime error.

Perl.

A) yes, that’s how interpreted languages work.

B) the very simple, long established way to avoid it, is to configure your linter:

https://eslint.org/docs/latest/rules/no-undef

I haven’t used Perl though, what do you like better about it?
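To make the distinction concrete: in plain JavaScript an undeclared identifier is only reported when the line actually runs, as a `ReferenceError`. The sketch below uses `new Function` purely to hide the identifier from the TypeScript checker; written directly in a `.ts` file, the same reference would be rejected by `tsc` at compile time with a “Cannot find name” error, and ESLint’s `no-undef` catches it in plain JavaScript. The name `notDeclaredAnywhere` is deliberately made up.

```typescript
// Builds a function from source text at runtime, so neither tsc nor a linter
// ever sees the identifier inside it; this mimics unlinted plain JavaScript.
const readsUndeclared = new Function("return notDeclaredAnywhere;");

function probeUndeclared(): string {
  try {
    readsUndeclared();
    return "no error";
  } catch (err) {
    // The missing binding is only discovered when the body executes.
    return err instanceof ReferenceError ? "ReferenceError" : "other error";
  }
}

console.log(probeUndeclared()); // logs: ReferenceError
```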

> I haven’t used Perl though, what do you like better about it?

“Undeclared variable” is a compile-time error.

K, well, configure your linter the way a professional TypeScript environment should have it configured, and it will be there too. Not to be rude but not having a linter configured and running is a pretty basic issue. If you configured your project with Vite or any other framework, it would have this configured OOTB.

> Not to be rude but not having a linter configured and running is a pretty basic issue.

Yeah, if you’re a C programmer in the 1980s, maybe. But it’s 2006 now and compilers are able to do basic sanity checks all on their own.

Interpreted languages don’t have compilers, and one of the steps that compilers do is LINTING. You’re literally complaining about not configuring your compiler properly and blaming it on the language.

JavaScript only has a single number type, so 0.0 is the same as 0. Thus when you are sending a JS object as JSON, in certain situations it will literally change 0.0 to 0 for you and send that instead (same with any number that has a zero decimal). This will cause casting errors in other languages when they attempt to deserialize ints into doubles or floats.
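A quick illustration of the behavior described, using made-up field names: because `1.0` and `1` are the same Number value in JavaScript, `JSON.stringify` has no fractional part left to emit. What the receiving side then does with `1` depends on its own parser.

```typescript
// 1.0 and 1 (and 0.0 and 0) are the same Number value, so the fractional
// part is already gone before serialization starts. Field names are made up.
const payload = { price: 1.0, discount: 0.0, rate: 1.5 };

const json = JSON.stringify(payload);
console.log(json); // {"price":1,"discount":0,"rate":1.5}

// Parsing goes the same way: "1.0" and "1" both become the number 1.
console.log(JSON.parse("1.0") === JSON.parse("1")); // true
console.log(Number.isInteger(payload.price)); // true
```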

It will cause casting errors in other languages that have badly written, non-standards compliant, JSON parsers, as 1 and 1.0 being the same is part of the official JSON ISO standard and has been for a long time: https://json-schema.org/understanding-json-schema/reference/numeric

JSON schema is not a standard lol. 😂 it especially isn’t a standard across languages. And it most definitely isn’t an ISO standard 🤣. JSON Data Interchange Format is a standard, but it wasn’t published until 2017, and it doesn’t say anything about 1.0 needs to auto cast to 1 (because that would be fucking idiotic). https://datatracker.ietf.org/doc/html/rfc8259

JSON Schema does have a draft in the IETF right now, but JSON Schema isn’t a specification of the language, it’s for defining a schema for your code. https://datatracker.ietf.org/doc/draft-handrews-json-schema/

Edit: and to add to that, JavaScript has a habit of declaring its dumb bugs as “it’s in the spec” years after the fact. Just because it’s in the spec doesn’t mean it’s not a bug, and just because it’s in the spec doesn’t mean everywhere else is incorrect.

Yes, it is:

https://www.iso.org/standard/71616.html

Page 3 of INTERNATIONAL STANDARD

- ISO/IEC 21778 - Information technology — The JSON data interchange syntax

> 8 Numbers
>
> A number is a sequence of decimal digits with no superfluous leading zero. It may have a preceding minus sign (U+002D). It may have a fractional part prefixed by a decimal point (U+002E). It may have an exponent, prefixed by e (U+0065) or E (U+0045) and optionally + (U+002B) or - (U+002D). The digits are the code points U+0030 through U+0039.
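The quoted clause only describes which character sequences are valid numbers. As a sketch, it can be transcribed into a regular expression like the one below (hand-written from the quoted text, so treat it as an approximation rather than the standard’s own grammar). Both `1` and `1.0` pass; the pattern has nothing to say about whether they denote the same value.

```typescript
// Hand-written transcription of the quoted number syntax:
//   optional minus sign (U+002D)
//   integer part: "0", or a nonzero digit then more digits (no superfluous leading zero)
//   optional fraction: "." followed by one or more digits
//   optional exponent: "e" or "E", optional "+" or "-", then one or more digits
const JSON_NUMBER = /^-?(?:0|[1-9][0-9]*)(?:\.[0-9]+)?(?:[eE][+-]?[0-9]+)?$/;

function isJsonNumberToken(text: string): boolean {
  return JSON_NUMBER.test(text);
}

const samples = ["1", "1.0", "-0.5", "2e10", "1E-3", "01", "+1", ".5", "1."];
for (const s of samples) {
  console.log(s, isJsonNumberToken(s));
}
```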

You clearly do not understand the difference between a specification and a schema. On top of that, you clearly don’t even realize that what you wrote down doesn’t agree with you. Nowhere in that spec does it say anything about auto casting a double or float to an int.

That confirms exactly what tyler said. I’m not sure if you’re misreading replies to your posts or misreading your own posts, but I think you’re really missing the point.

Let’s go through it point by point.

- tyler said “JSON Schema is not an ISO standard”. As far as I can tell, this is true, and you have not presented any evidence to the contrary.

- tyler said “JSON Data Interchange Format is a standard, but it wasn’t published until 2017, and it doesn’t say anything about 1.0 needs to auto cast to 1”. This is true and confirmed by your own link, which is a standard from 2017 that declares compatibility with RFC 8259 (tyler’s link) and doesn’t say anything about autocasting 1.0 to 1 (because that’s semantics, and your ISO standard only describes syntax).

- tyler said “JSON Schema isn’t a specification of the language, it’s for defining a schema for your code”, which is true (and you haven’t disputed it).

Your response starts with “yes it is”, but it’s unclear what part you’re disagreeing with, because your own link agrees with pretty much everything tyler said.

Even the part of the standard you’re explicitly quoting does not say anything about 1.0 and 1 being the same number.

Why did you bring up JSON Schema (by linking to their website) in the first place? Were you just confused about the difference between JSON in general and JSON Schema?


JavaScript bad!

Hahaha! True

Our mass media can incite fear of chickens, pigs, and cattle. Then their existence itself can be defined as a terrorist act. We’ll redefine vegan to mean only those that eat terrorists to save the other animals. Actual vegans can call themselves “vegetablers”. Nothing changes and everyone feels good because if they don’t feel good then they’re not human.

If the existence is a terroristic act, what do you call farmers who breed these creatures on purpose? I guess the new ‘vegans’ could then eat the very last generation of terroristic animals, and then everyone needs to go ‘vegetabler’. I guess that doesn’t sound too bad to those who are vegetabler on purpose. ;)

> If the existence is a terroristic act, what do you call farmers who breed these creatures on purpose?

Capitalists.

Plants are alive!

why do beards make men shitheels?

even santa only gives the good stuff to rich kids

Look at those butthurt downvotes, haha. Currently 2 - 4.

Let me reach around mine to give you an upvote.

Honestly the meme of ‘JavaScript bad’ is so tired and outdated it’s ridiculous. It made sense 14 years ago before the invention of TypeScript and ES5/6+, but these days it basically just shows ignorance or the blind regurgitation of a decade-old meme.

TypeScript is hands down the most pleasant language to work in, followed closely by the more modern compiled ones like Go, Swift, and C#, and miles ahead of widely used legacy ones like Java and PHP, and the whitespace-sensitive, untyped nightmare that is Python.

I’m like 99% sure that it’s just because JavaScript / Typescript is so common that for anyone who doesn’t start with it, it’s the second language they learn, and at that point they’re just whiny and butthurt about learning a new language.

Nothing says language of the year better than a language that needs to be compiled to an inefficient interpreted language made for browsers and then grossly stuffed into a stripped out Chrome engine to serve as backend. All filled with thousands of dependencies badly managed through npm to overcome the lack of a standard library actually useful for backend stuff.

Oh I’m sorry, I was waiting for you to name a more successful cross platform development language and framework?

Oh, you’re listing Java, and Xamarin, and otherwise rewriting the same app 4 times? Cool beans bro. Great development choices you’ve made.

> All filled with thousands of dependencies badly managed through npm to overcome the lack of a standard library actually useful for backend stuff.

Bruh, this is the dumbest fucking complaint. “Open source language relies on open source packages, OMG WHAT?!?!!”

Please do go ahead and show me the OOTB OAuth library that comes with your backend language of choice, or kindly stfu about everything you need being provided by the language and not by third party libraries.

As a TypeScript dev, TypeScript is not pleasant to work with at all. I don’t love Java or C#, but I’d take them any day of the week over anything JS-based. TypeScript provides the illusion of type safety without actually providing full type safety, because of one random library whose functionality you depend on that returns and takes in `any` instead of using generic types. Unlike pretty much any other statically typed language, compiled TypeScript will do nothing to ensure typing at runtime, and won’t error at all if something else gets passed in until you try to use a method or field that it doesn’t have. It will just fail silently unless you add type checking to your functions/methods that are already annotated as taking in your desired types. Languages like Java and C# would throw an exception immediately when you try to cast the value, and languages like Rust and Go wouldn’t even compile unless you either handle the case or panic at that exact location. Pretty much the only language that handles this worse is Python (and maybe Lua? I don’t really know much about Lua though).

TLDR; TypeScript in theory is very different from TypeScript in practice, and that difference makes it very annoying to use.
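A minimal sketch of the failure mode described above, where `legacyLoadConfig()` is a hypothetical stand-in for the untyped library and `Config` a hypothetical shape. Because `any` is assignable to every type, the mismatch only shows up when a method is called; a user-defined type guard (`value is Config`) is one common way to move the check to the point where the value enters your code.

```typescript
interface Config {
  retries: number;
}

// Hypothetical untyped library function: declared as returning `any`,
// and at runtime it hands back a string where a number is expected.
function legacyLoadConfig(): any {
  return { retries: "3" };
}

// Compiles without complaint: `any` is assignable to Config.
const unchecked: Config = legacyLoadConfig();

function useUnchecked(): string {
  // Fails only here, at runtime: strings have no toFixed method.
  return unchecked.retries.toFixed(1);
}

// User-defined type guard: an explicit runtime check that also narrows the type.
function isConfig(value: unknown): value is Config {
  return (
    typeof value === "object" &&
    value !== null &&
    typeof (value as { retries?: unknown }).retries === "number"
  );
}

function loadConfigChecked(): Config {
  const raw: unknown = legacyLoadConfig();
  if (!isConfig(raw)) {
    // Fails at the boundary instead of wherever the value is first used.
    throw new TypeError("legacyLoadConfig() returned something that is not a Config");
  }
  return raw;
}
```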

Bonus meme:

I have next to no experience with TypeScript, but want to make a case in defence of Python: Python does not pretend to have any kind of type safety, and more or less actively encourages duck typing.

Now, you can like or dislike duck typing, but for the kind of quick and dirty scripting or proof of concept prototyping that I think Python excels at, duck typing can help you get the job done much more efficiently.

In my opinion, it’s much more frustrating to work with a language that pretends to be type safe while not being so.

Because of this, I regularly turn off the type checking on my python linter, because it’s throwing warnings about “invalid types”, due to incomplete or outdated docs, when I know for a fact that the function in question works with whatever type I’m giving it. There is really no such thing as an “invalid type” in Python, because it’s a language that does not intend to be type-safe.

That’s entirely fair for the use case of a small script or plugin, or even a small website. I’d quickly get annoyed with Python if I had to use it for a larger project though.

TypeScript breaks down when you need it for a codebase that’s longer than a few thousand lines of code. I use pure JavaScript in my personal website and it’s not that bad. At work where the frontend I work on has 20,000 lines of TypeScript not including the HTML files, it’s a massive headache.

I wholeheartedly agree: In my job, I develop mathematical models which are implemented in Fortran/C/C++, but all the models have a Python interface. In practice, we use Python as a “front end”. That is: when running the models to generate plots or tables, or whatever, that is done through Python, because plotting and file handling is quick and easy in Python.

I also do quite a bit of prototyping in Python, where I quickly want to throw something together to check if the general concept works.

We had one model that was actually implemented in Python, and it took less than a year before it was re-implemented in C++, because nobody other than the original dev could really use it or maintain it. It became painfully clear how much of a burden Python can be once you have a code base over a certain size.

> Pretty much the only language that handles this worse is Python (and maybe Lua? I don’t really know much about Lua though).

This is the case for literally all interpreted languages, and is an inherent part of them being interpreted.

However, while I recognize that can happen, I’ve literally never come across it in my time working on TypeScript. I’m not sure what third-party libraries you’re relying on, but the most popular OAuth libraries, ORMs, frontend component libraries, state management libraries, graphing libraries, etc. are all written in pure TypeScript these days.

> This is the case for literally all interpreted languages, and is an inherent part of them being interpreted.

It’s actually the opposite. The idea of “types” is almost entirely made up by compilers and runtime environments (including interpreters). The only thing assembly instructions actually care about is how many bits a binary value has and whether or not it should be stored as a floating point, integer, or pointer (I’m oversimplifying here but the point still stands). Assembly instructions only care about the data in the registers (or an address in memory) that they operate on.

There is no part of an interpreted language that requires it to not have any type-checking. In fact, many languages use runtime environments for better runtime type diagnostics (e.g. Java and C#) that couldn’t be enforced at runtime in a purely compiled language like C or C++. Purely compiled binaries are pretty much the only environments where automatic runtime type checking can’t be added without basically recreating a runtime environment in the binary (as languages like Go do). The only interpreter that can’t have type-checking is your physical CPU.

If you meant that it is inherent to the language in that it was intended, you could make the case that for smaller-scale languages like bash, Lua, and in some cases Python, the dynamic typing makes it better. Working with large, complex frontends is not one of those cases. Even if this was an intentional feature of JavaScript, the existence of TypeScript at all proves it was a bad one.

> However, while I recognize that can happen, I’ve literally never come across it in my time working on TypeScript. I’m not sure what third-party libraries you’re relying on, but the most popular OAuth libraries, ORMs, frontend component libraries, state management libraries, graphing libraries, etc. are all written in pure TypeScript these days.

This next example doesn’t directly return `any`, but it is more ubiquitous than the admittedly niche libraries the code I work on depends on: many HTTP request services in TypeScript will fill in missing fields as undefined, even if the typing shouldn’t allow for that, because that type requirement doesn’t actually exist at runtime. Languages like Kotlin, C#, and Rust would all error because the deserialization failed when something that shouldn’t be considered nullable had an empty value. Java might also have options for this depending on the serialization library used.
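To illustrate, here is a sketch with a hypothetical `User` type and a hypothetical hand-rolled `parseUser` validator: casting the output of `JSON.parse` only informs the compiler, so a missing field arrives as `undefined`, whereas validating at the boundary makes the failure happen during deserialization, which is closer to the behavior described for Kotlin, C#, and Rust.

```typescript
// Hypothetical response shape; `name` is not optional in the type.
interface User {
  id: number;
  name: string;
}

const body = '{"id": 7}'; // the server omitted "name"

// A cast only informs the compiler; nothing is checked at runtime.
const trusted = JSON.parse(body) as User;
console.log(trusted.name); // undefined, despite the type saying string

// Hypothetical validator: fails during deserialization instead of later.
function parseUser(text: string): User {
  const raw: unknown = JSON.parse(text);
  if (typeof raw !== "object" || raw === null) {
    throw new TypeError("expected a JSON object");
  }
  const { id, name } = raw as { id?: unknown; name?: unknown };
  if (typeof id !== "number") {
    throw new TypeError("missing or non-numeric field: id");
  }
  if (typeof name !== "string") {
    throw new TypeError("missing or non-string field: name");
  }
  return { id, name };
}
```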

That’s just what I’d expect an evil chicken to say.

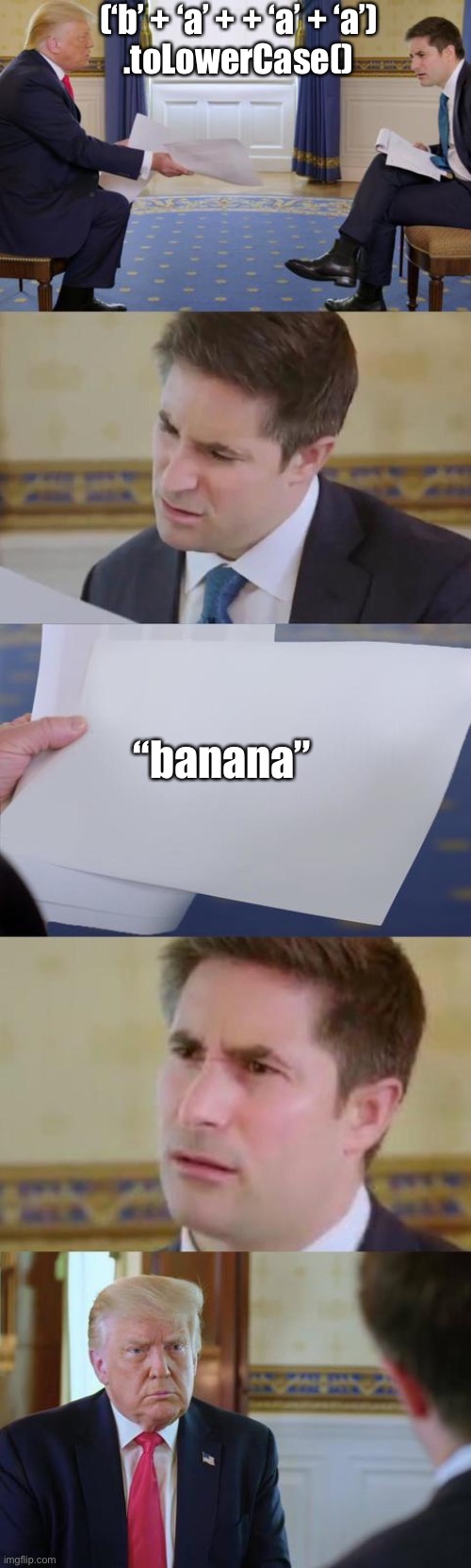

I learned today that in JavaScript `[2,-2,6,-7].sort()` results in `[-2,-7,2,6]`.

WTF is it casting it to string or something?

You can bet your pants it does!
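For the curious: with no comparator, `Array.prototype.sort` converts the elements to strings and compares them by UTF-16 code units, and `-` comes before the digits, hence `[-2,-7,2,6]`. A short sketch of the default versus a numeric comparator (copies are sorted because `sort` works in place):

```typescript
const values = [2, -2, 6, -7];

// Default sort: elements are compared as strings ("-2" < "-7" < "2" < "6").
const asStrings = [...values].sort();
console.log(asStrings); // [ -2, -7, 2, 6 ]

// Numeric sort: a negative result from the comparator puts a before b.
const numeric = [...values].sort((a, b) => a - b);
console.log(numeric); // [ -7, -2, 2, 6 ]
```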

This 💩 is 🍌s

baNaNas

Few people use just TypeScript, though; there are always dangerously exposed native libraries in the mix.

I would somewhat disagree. These days virtually every popular library on npm is pure TypeScript, and every new project I see at a company is pure TypeScript, with only legacy migrations of old systems still mixing the two.

I’m not involved enough to really comment on that, but it’s not a 14 year old joke as much as a 1 or 2 year old joke if so.

Glad to hear it’s taking off. Hopefully browsers migrate to supporting it natively and deprecating JavaScript next.

> TypeScript is hands down the most pleasant language to work in

Agreed. But doesn’t make “JavaScript bad” any less true…